A rapid succession of strategic moves in computing power is cutting through the noise of the 2025 AI industry. Recently, two semiconductor giants secured multibillion-dollar computing orders from OpenAI. One is leveraging its technological supremacy to dominate emerging sectors, while the other is carving a path forward through an equity alliance. These divergent strategies are bringing the intense global contest for dominance in the core AI chip market into sharp relief.

The Leading AI Chip Architects and Their Divergent Bets

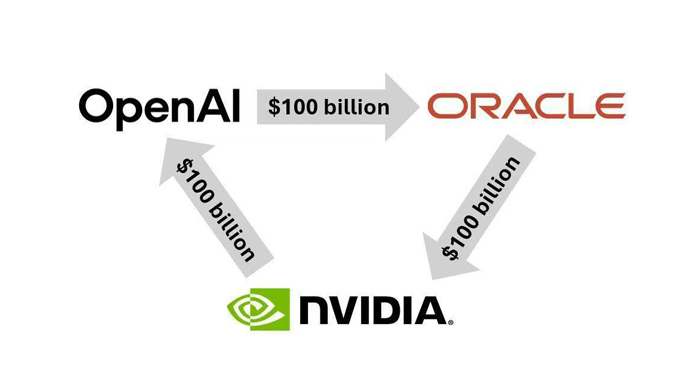

In September, NVIDIA and OpenAI announced a strategic partnership of historic proportions. OpenAI is set to deploy at least 10 GW (Gigawatts) of NVIDIA's systems, encompassing millions of GPUs. In return, NVIDIA has committed to investing up to $100 billion in OpenAI as this computational power comes online.

10 GW of computing power translates to approximately 4-5 million GPUs. That is roughly equal to NVIDIA's entire projected AI chip shipments for 2025, and double its total shipments in the previous year. The two companies plan to deploy the first GW-scale data center, based on NVIDIA's Vera Rubin platform, in the second half of 2026.
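As a rough sanity check on these figures, the sketch below backs out the average power budget per GPU that 10 GW and 4-5 million GPUs would imply. It assumes the full 10 GW is divided evenly across GPUs (so the result includes cooling, networking, and other facility overhead); the function name is illustrative only.

```python
# Back-of-the-envelope: implied average power per GPU from the article's figures.
# Assumption: the whole 10 GW is spread evenly across all GPUs, so the result
# is an all-in facility figure, not the power draw of the chip itself.

TOTAL_POWER_GW = 10
GPU_COUNT_LOW = 4_000_000
GPU_COUNT_HIGH = 5_000_000

KW_PER_GW = 1_000_000  # 1 GW = 1,000,000 kW


def implied_kw_per_gpu(total_gw: float, gpu_count: int) -> float:
    """Return the average power per GPU in kilowatts."""
    return total_gw * KW_PER_GW / gpu_count


kw_if_4m = implied_kw_per_gpu(TOTAL_POWER_GW, GPU_COUNT_LOW)
kw_if_5m = implied_kw_per_gpu(TOTAL_POWER_GW, GPU_COUNT_HIGH)
print(f"Implied all-in power per GPU: {kw_if_5m:.1f} to {kw_if_4m:.1f} kW")
```

Under that assumption, the two quoted figures are consistent with an all-in budget of about 2 to 2.5 kW per GPU.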

This collaboration is pivotal for both entities. Jensen Huang, NVIDIA's CEO, hailed it as the "largest computing project in history" and the "largest AI infrastructure project ever." Sam Altman of OpenAI stated that without this infrastructure, OpenAI could neither deliver the services users demand nor continue building superior models. He described these resources as the essential "fuel" powering OpenAI's model improvements and revenue growth.

In early October, OpenAI and AMD signed another major agreement. OpenAI will deploy up to 6 GW of AMD Instinct GPUs over several years, with the first 1 GW slated for 2026. The deployment begins with the AMD Instinct MI450 series and extends to future generations, building on the two companies' earlier work on the MI300X and MI350X.

A unique aspect of this deal is that AMD has issued OpenAI warrants for up to 160 million shares of AMD stock. The warrants vest in stages tied to chip deployment milestones and AMD's stock price. If they are fully exercised, OpenAI could acquire roughly a 10% stake in AMD. This equity-based bond effectively transforms OpenAI and AMD into a "community of shared interests."
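The "roughly 10%" figure can be checked with simple arithmetic. The sketch below backs out the AMD share count that 160 million warrant shares and a 10% stake would imply, under two assumptions that the article does not specify: either the stake is measured against the existing share count, or against the share count after the new shares are issued. The function names are illustrative.

```python
# Back out the share count implied by "160 million warrants is roughly 10%".
# The article does not state AMD's share count or whether the 10% is measured
# before or after dilution, so both readings are shown as assumptions.

WARRANT_SHARES = 160_000_000
STAKE = 0.10


def implied_shares_pre_dilution(warrant_shares: int, stake: float) -> float:
    """stake = warrants / existing_shares  =>  existing = warrants / stake."""
    return warrant_shares / stake


def implied_shares_post_dilution(warrant_shares: int, stake: float) -> float:
    """stake = warrants / (existing + warrants)  =>  existing = warrants * (1 - stake) / stake."""
    return warrant_shares * (1 - stake) / stake


pre = implied_shares_pre_dilution(WARRANT_SHARES, STAKE)
post = implied_shares_post_dilution(WARRANT_SHARES, STAKE)
print(f"Implied existing shares (pre-dilution reading):  {pre / 1e9:.2f} billion")
print(f"Implied existing shares (post-dilution reading): {post / 1e9:.2f} billion")
```

Either reading points to an existing share count on the order of 1.4 to 1.6 billion, which is why the stake is described as "roughly" 10%.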

What are the respective implications of these deals for NVIDIA and AMD?

The Ripple Effects on NVIDIA and AMD

The most immediate impact is the direct financial windfall for both companies.

Jensen Huang indicated on an earnings call that constructing 1 GW of computing capacity costs between $50 billion and $60 billion, with NVIDIA's chips and systems accounting for about $35 billion of that. On that basis, the 10 GW project represents a total investment of $500-600 billion, on par with the previously announced "Stargate" project, and NVIDIA stands to gain approximately $350 billion in revenue from it.
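The scaling behind these totals is straightforward multiplication. A minimal sketch, assuming the per-GW figures quoted above scale linearly to 10 GW (variable names are illustrative):

```python
# Scale the per-GW cost figures quoted in the article to the 10 GW project.
# Assumption: costs scale linearly with capacity, with no volume discounts.

PROJECT_GW = 10
COST_PER_GW_BN = (50, 60)   # total build cost per GW, in billions of USD
NVIDIA_PER_GW_BN = 35       # NVIDIA chips and systems per GW, in billions of USD

total_low_bn = COST_PER_GW_BN[0] * PROJECT_GW
total_high_bn = COST_PER_GW_BN[1] * PROJECT_GW
nvidia_revenue_bn = NVIDIA_PER_GW_BN * PROJECT_GW

# NVIDIA's share of total spend at each end of the cost range
nvidia_share_high = NVIDIA_PER_GW_BN / COST_PER_GW_BN[0]
nvidia_share_low = NVIDIA_PER_GW_BN / COST_PER_GW_BN[1]

print(f"Total investment: ${total_low_bn}-{total_high_bn} billion")
print(f"NVIDIA revenue:   ${nvidia_revenue_bn} billion")
print(f"NVIDIA share of spend: {nvidia_share_low:.0%} to {nvidia_share_high:.0%}")
```

On these numbers, NVIDIA hardware would account for somewhere between roughly 58% and 70% of total project spending.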

Lisa Su, CEO of AMD, projected that the agreement would generate tens of billions of dollars in additional annual revenue for AMD over the next four years. She also anticipated a "bandwagon effect," attracting other clients and potentially pushing AMD's total new revenue past $100 billion.

Beyond direct earnings, these partnerships have profound implications for the broader AI market. Industry insiders highlight several key areas:

Pricing Power: NVIDIA's formidable pricing leverage may face dilution. By partnering with AMD, OpenAI gains bargaining power, potentially forcing NVIDIA to reduce premiums on future orders.

Technology Co-development: The OpenAI-AMD partnership goes beyond a simple buyer-supplier relationship. OpenAI will provide direct design feedback on multiple generations of AMD's Instinct GPUs, from the MI300X and MI350X to the MI450. This establishes a framework for joint hardware-software R&D. The two companies will share product roadmaps and collaboratively optimize GPU architecture, memory bandwidth, AI accelerators, and AMD's ROCm software ecosystem. This deep collaboration could directly challenge NVIDIA's integrated hardware-software advantage.

Market Validation and Access: Beyond the tens of billions in annual revenue, the core value of the OpenAI deal for AMD lies in the powerful "customer endorsement." This has already spurred OEMs like Dell and Lenovo to accelerate mass production of MI300X systems, and cloud providers like Microsoft and Oracle are following with purchases. Lisa Su recently stated that the AI boom is expected to last a decade, with 2025 being only its second year. AMD's close tie-up with OpenAI secures a crucial market entry point for the remaining eight years of concentrated computing demand.

Market Tremors: Identifying the Beneficiaries

While the spotlight is on the chip designers, the implications of these bets extend far beyond.

Chip Manufacturing & Packaging: AMD and NVIDIA rely on TSMC for manufacturing and for advanced packaging such as CoWoS. These massive orders will continue to strain, and drive demand for, TSMC's cutting-edge process and packaging capacity. Outsourced packaging and testing (OSAT) firms like ASE Group and Amkor will also benefit from the soaring demand for AI chips. ASE, the world's largest independent OSAT, is reportedly expanding its advanced packaging capacity in Kaohsiung rapidly, boosting production for CoWoS, SoIC, and FOPLP.

Tongfu Microelectronics: A company less in the limelight but indispensable as a "behind-the-scenes champion" for AMD is Tongfu Microelectronics. It has formed a joint venture and strategic partnership with AMD, signing long-term agreements to provide packaging and testing services for AMD's AI PC chips and for its AI accelerators used in training and inference. Tongfu is AMD's largest packaging and testing supplier, with AMD accounting for over 60% of its order revenue in the first half of 2025. AMD also holds a 15% stake in Tongfu's plants in Penang and Suzhou. The OpenAI-AMD deal therefore benefits Tongfu Microelectronics directly.

Memory (HBM): High-Bandwidth Memory (HBM) is a critical electronic component for NVIDIA and AMD GPUs. Key players like SK Hynix, Micron, and Samsung are prime beneficiaries of this industry upswing. Notably, recent market reports suggest NVIDIA has largely confirmed that its latest GB300 AI chip will utilize Samsung's 5th-gen HBM3E, indicating Samsung's successful entry into NVIDIA's supply chain after previous challenges.

Servers & Data Centers: Server manufacturers like Dell and Supermicro, which provide integrated solutions, will see a surge in orders. The need for high-speed networking within data centers will also grow, benefiting companies like Arista Networks as AI data centers upgrade to 400/800 Gigabit Ethernet.

Power & Cooling: GW-scale compute clusters place extreme demands on power delivery and cooling. This is a direct boon for data center infrastructure suppliers like Vertiv, Schneider Electric, and Eaton, specializing in power distribution and liquid cooling solutions.

OpenAI's Third Path: The Pursuit of Self-Reliance

While forging alliances with GPU giants, OpenAI is actively pursuing a third path: developing its own AI chips.

Since last year, OpenAI has been collaborating with Broadcom on its first custom AI chip, designed to handle its massive AI workloads, particularly inference. Broadcom is assisting with the chip design, and production is slated to begin at TSMC in 2026. The chip is expected to use TSMC's 3nm process, with later versions moving to its 1.6nm process.

OpenAI had previously explored building its own foundry network but shelved the plan due to prohibitive costs and lengthy timelines, opting instead to focus on internal chip design.

This strategy is significant for two main reasons:

Reducing Dependency and Cost: As one of the largest buyers of NVIDIA GPUs, OpenAI has faced constraints due to chip shortages, supply delays, and the immense cost of training models. Sam Altman has publicly expressed frustration over GPU scarcity hindering AI development. In-house chip design is a strategic move to gain autonomy, mitigate supply chain risks, and potentially lower long-term costs.

Competitive Landscape: OpenAI is not alone. Other tech giants like Meta (with its MTIA series) and Microsoft (with Azure Maia 100) have also introduced custom AI chips. Amazon's in-house Trainium chips are reported to offer 30-40% better price-performance than comparable GPU-based options. Self-reliance is becoming a key competitive differentiator.

However, in-house chip development and AMD's advancements are unlikely to challenge NVIDIA's market dominance in the short term.

According to a recent Susquehanna analysis, NVIDIA currently commands an 80% market share in AI GPUs. While competition will intensify, the firm predicts NVIDIA will still hold a 67% share by 2030, underscoring its resilient industry position. AMD is forecast to capture about 4% of the market by 2030, with its annual revenue growing from $6.3 billion to $20 billion. Meanwhile, Broadcom, by focusing on custom ASICs, is projected to seize a 14% market share, with revenue reaching $60 billion by 2030, up from $14.5 billion today. The strategic game for AI computational supremacy, driven by relentless innovation in AI chips and critical electronic components, is far from over.
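To put the revenue forecasts on a comparable footing, the sketch below converts them into implied compound annual growth rates. It assumes "today" means 2025, giving a five-year horizon to 2030; the analysis cited above does not state the base year, so treat that as an assumption.

```python
# Implied compound annual growth rate (CAGR) from the revenue forecasts above.
# Assumption: the starting figures are for 2025, so the horizon is 5 years.

YEARS = 2030 - 2025

FORECASTS_BN = {
    # company: (revenue today, projected 2030 revenue), in billions of USD
    "AMD": (6.3, 20.0),
    "Broadcom": (14.5, 60.0),
}


def cagr(start: float, end: float, years: int) -> float:
    """Return the constant annual growth rate that takes start to end over years."""
    return (end / start) ** (1 / years) - 1


growth = {name: cagr(start, end, YEARS) for name, (start, end) in FORECASTS_BN.items()}
for name, rate in growth.items():
    print(f"{name}: about {rate:.0%} per year")
```

Under that five-year assumption, both forecasts imply sustained annual growth well above 20%, with Broadcom's custom ASIC business growing faster than AMD's.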